On-Premise vs. Cloud

Which is the best fit for your business?

Cloud computing is gaining popularity. It offers companies enhanced security and the ability to move all enterprise workloads to the cloud without a huge upfront infrastructure investment, which gives them much-needed flexibility in doing business and saves time and money.

According to Forbes, 83% of enterprise workloads will be in the cloud by this year, while on-premises workloads will constitute only 27% of all workloads.

But there are factors to consider before choosing to migrate all your enterprise workloads to the cloud or opting for an on-premise deployment model.

There is no one-size-fits-all approach; it depends on your business and IT needs. If your business has global expansion plans in place, the cloud has much greater appeal. Migrating workloads to the cloud makes data accessible to anyone with an internet-enabled device.

Without much effort, you are connected to your customers, remote employees, partners, and other businesses.

On the other hand, if your business is in a highly regulated industry with privacy concerns and a need to customize system operations, the on-premise deployment model may sometimes be preferable.

To help you discern which solution best fits your business needs, we will highlight the key differences between the two.

Cloud Security

With cloud infrastructure, security is always the main concern. Sensitive financial data, customer and employee data, client lists, and much more delicate information are stored in your on-premise data center.

Before migrating all of this to a cloud infrastructure, you must thoroughly research the cloud provider’s capabilities for handling sensitive data. Renowned cloud providers usually have strict data security measures and policies.

You can still commission a third-party security audit of the cloud providers you are considering or, even better, consult a cloud security specialist to ensure your cloud architecture is built to the highest security standards and meets all your needs.

As for on-premise infrastructure, security lies solely with you. You are responsible for real-time threat detection and for implementing preventive measures.

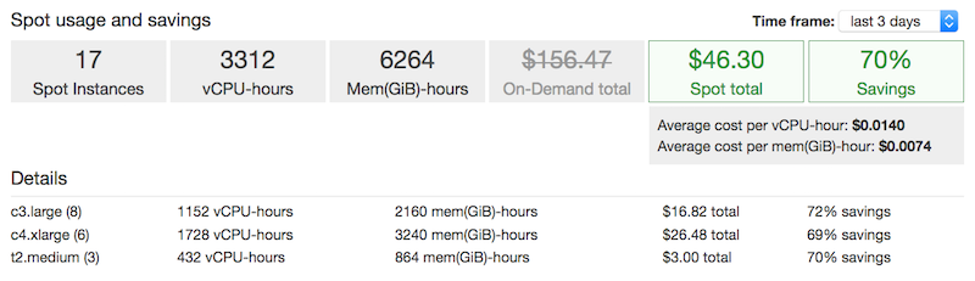

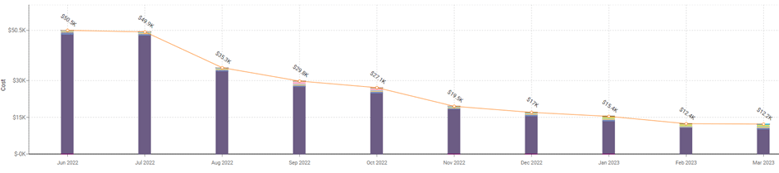

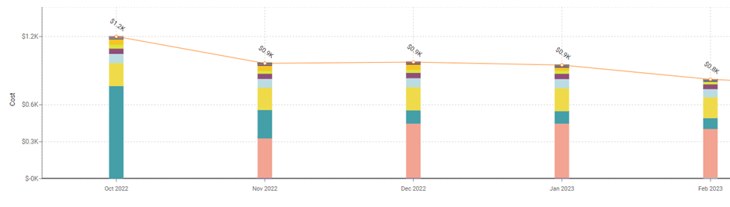

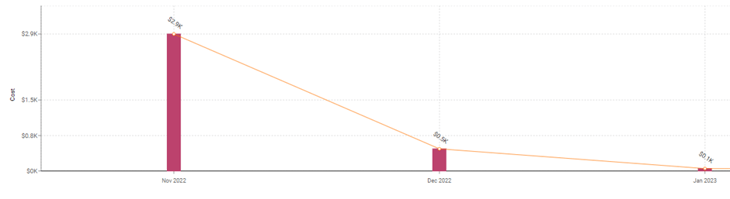

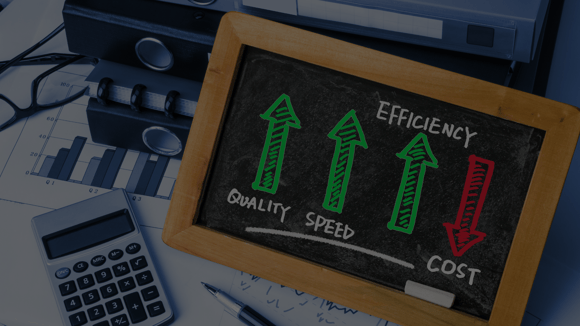

Cost optimization

One major advantage of adopting cloud infrastructure is its low cost of entry: no physical servers to buy, no manual maintenance costs, and no heavy costs from damaged physical servers. Your cloud provider is responsible for maintaining the infrastructure behind your virtual servers.

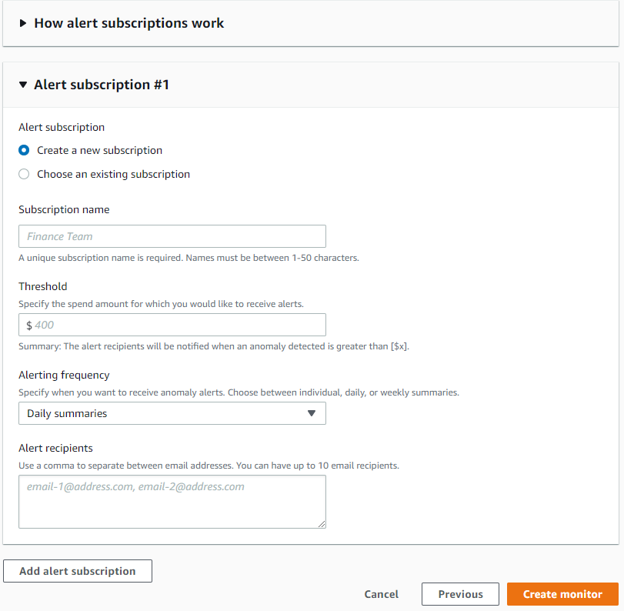

Having said that, cloud providers use a pay-as-you-go model, which can make your operational costs skyrocket when administrators are not familiar with cloud pricing models. Building, operating, and maintaining a cloud architecture that maximizes your cloud benefits while keeping costs under control is not as easy as it sounds and requires a high level of expertise. A professional cloud cost optimization specialist can ensure you get everything you paid for and are not bill-shocked by unexpected surplus fees.

On-premise software, on the other hand, is usually sold for a one-time license fee. On top of that, you pay for in-house servers, server maintenance, and IT professionals to deal with any potential risks. And this does not account for the time and money lost when a system fails and the available employees lack the expertise to contain the situation.

Customization

On-premise IT infrastructure offers full control to an enterprise. You can tailor your system to your specialized needs. The system is in your hands and only you can modify it to your liking and business needs.

With cloud infrastructure, it’s a bit trickier. Customizing cloud platform solutions to your organization’s needs takes high-level expertise to plan and build a cloud solution tailored to your requirements.

Flexibility

When your company is expanding its market reach, cloud infrastructure is essential because it doesn’t require huge investments. Data can be accessed from anywhere in the world through virtual servers provided by your cloud provider, and scaling your architecture is easy (especially if your initial planning and construction were done right and aimed to support growth).

With an on-premise system, going into other markets would require you to establish physical servers in those locations and invest in new staff. This might make you think twice about your expansion plans due to the huge costs.

Which is the best?

Generally, the on-premise deployment model suits enterprises that require full control of their servers and have the personnel needed to maintain the hardware and software and continuously secure the network.

These enterprises store sensitive information and would rather invest in their own security measures, on a system they fully control, than move their data to the cloud.

Small businesses and large enterprises alike, including Apple, Netflix, and Instagram, have moved IT infrastructure to the cloud for its flexibility to support expansion and growth and its low cost of entry, with no need for a huge upfront investment in infrastructure and maintenance.

With the various prebuilt tools and features, and the right expert partner to guide you through your cloud journey, you can customize the system to your needs while upholding top security standards and optimizing ongoing costs.

6 steps to successful cloud migration

There are countless opportunities for improving performance and productivity in the cloud. Cloud migration is a process that adapts your infrastructure to your modern business environment. It is a chance to cut costs and tap into scalability, agility, and faster time to market. Even so, if not done right, cloud migration can produce the opposite results.

Costs in cloud migration

Migration costs depend entirely on your strategy. For instance, refactoring all your applications at once could lead to severe downtime and high costs. For a fast and cost-effective cloud migration, it is crucial to invest in strategy and assessments. The right plan factors in costs, downtime, employee training, and the duration of the whole process.

There is also the matter of aligning your finance team with your IT needs, which will require restructuring your CapEx/OpEx model. CapEx (capital expenditure) is the standard model of traditional on-premise IT, such as fixed investments in IT equipment and servers, while OpEx (operational expenditure) is how public cloud computing services are purchased (i.e., operational costs incurred on a monthly or yearly basis).

When migrating to the public cloud, you are shifting from traditional hardware and software ownership to a pay-as-you-go model, which means shifting from CapEx to OpEx. This gives your IT team the agility and flexibility to support your business’s scaling needs while maximizing cost efficiency. It will, however, require full alignment with all company stakeholders, as each model has different implications for cost, control, and operational flexibility.
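To make the CapEx-to-OpEx shift concrete, here is a minimal Python sketch that compares cumulative spend under both models and finds the month at which the pay-as-you-go bill overtakes an upfront purchase. All figures and function names are hypothetical illustrations, not real prices, and the model deliberately ignores staffing, hardware refreshes, and cloud discounts.

```python
# Hypothetical cost model: CapEx = large upfront purchase plus fixed yearly
# upkeep; OpEx = flat monthly pay-as-you-go bill. Figures are placeholders.

def cumulative_capex(upfront: float, yearly_upkeep: float, months: int) -> float:
    """Upfront hardware/license cost plus upkeep spread evenly over the months."""
    return upfront + yearly_upkeep * months / 12


def cumulative_opex(monthly_bill: float, months: int) -> float:
    """Pay-as-you-go: you pay only for each month of usage."""
    return monthly_bill * months


def break_even_month(upfront: float, yearly_upkeep: float,
                     monthly_bill: float, horizon: int = 120):
    """First month in which cumulative OpEx exceeds cumulative CapEx, or None."""
    for month in range(1, horizon + 1):
        if cumulative_opex(monthly_bill, month) > cumulative_capex(upfront, yearly_upkeep, month):
            return month
    return None  # the cloud bill never overtakes within the horizon


if __name__ == "__main__":
    # Hypothetical: $120k of servers and $24k/year upkeep vs a $6k/month cloud bill.
    print(break_even_month(upfront=120_000, yearly_upkeep=24_000, monthly_bill=6_000))
```

With these made-up numbers the cloud bill stays at or below the on-premise total for the first 30 months and overtakes it in month 31; a real comparison should use your own quotes and add the costs this sketch leaves out.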

Cloud Security

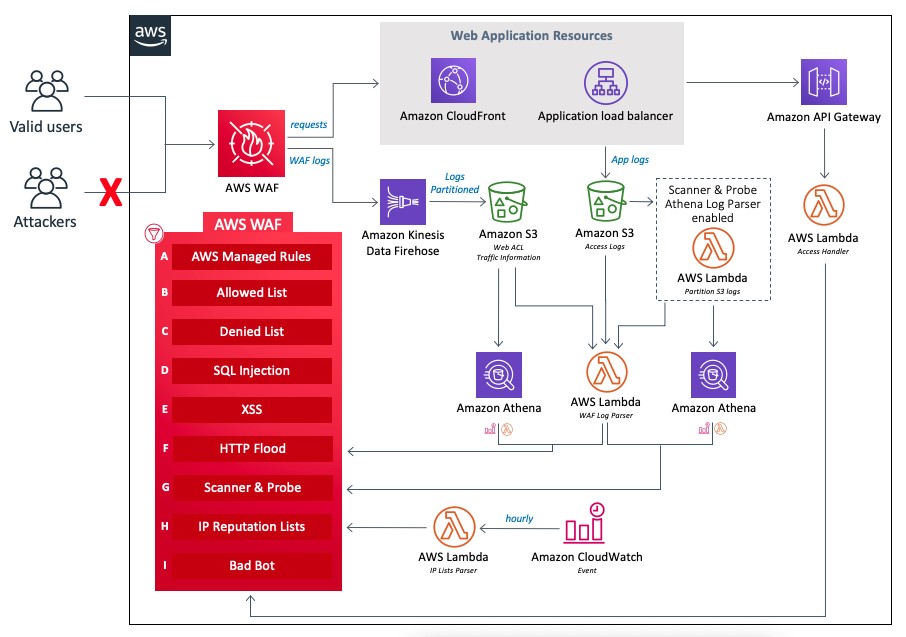

If the cloud is trumpeted as having all these benefits, why isn't every business migrating? Security is the biggest concern holding back cloud migration. With most cloud solutions, you are entrusting a third party with your data, so a careful evaluation of the provider, its processes, and its security controls is essential.

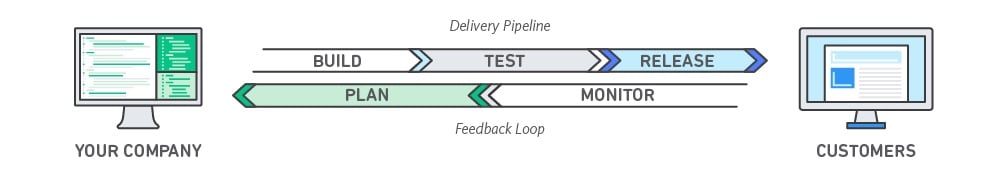

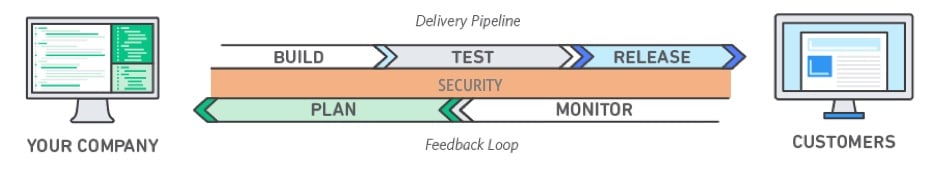

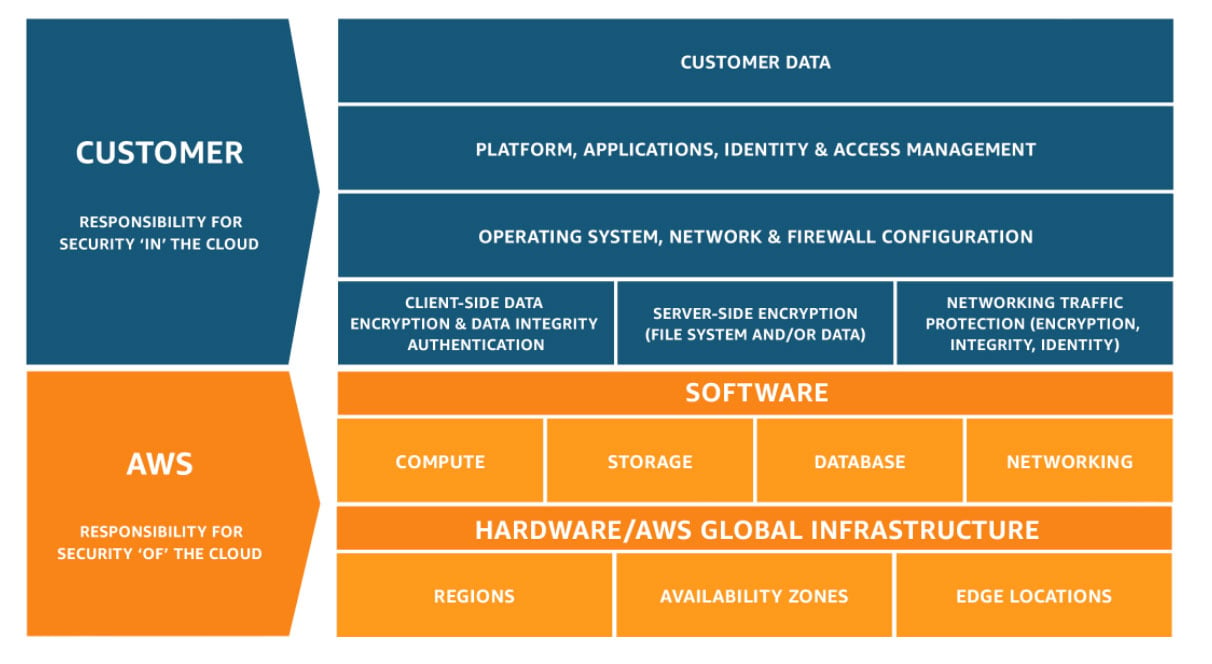

In cloud environments, there are generally two parties responsible for infrastructure security:

- Your cloud vendor.

- Your own company’s IT / Security team.

Some companies believe that once they migrate to the cloud, security becomes solely the cloud vendor’s responsibility. That’s not the case.

Both the cloud customers and cloud vendors share responsibilities in cloud security and are both liable for the security of the environment and infrastructure.

To better manage the shared responsibility, consider the following tips:

Define your cloud security needs and requirements before choosing a cloud vendor. If you know your requirements, you’ll select a cloud provider suited to answer your needs.

Clarify the roles and responsibilities of each party when it comes to cloud security. Comprehensively define who is responsible for what and to what extent. Know how far your cloud provider is willing to go to protect your environment.

Cloud service providers (CSPs) are responsible for the security of the physical or virtual infrastructure and the security configuration of their managed services, while cloud customers are in control of their data and the security measures they put in place to protect their data, systems, networks, and applications.
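One way to "comprehensively define who is responsible for what" is to keep the split as an explicit, checkable matrix. The Python sketch below is a hypothetical example of such a matrix, with control areas taken from the paragraph above; it lists each party's areas and flags any area on your checklist that nobody owns yet. The real boundaries should come from your provider's documentation and contract.

```python
from enum import Enum


class Owner(Enum):
    PROVIDER = "cloud provider"
    CUSTOMER = "customer"


# Hypothetical responsibility matrix following the split described above.
# The real boundary varies by provider and service model (IaaS, PaaS, SaaS).
RESPONSIBILITY = {
    "physical data centers and hardware": Owner.PROVIDER,
    "virtualization layer": Owner.PROVIDER,
    "managed service security configuration": Owner.PROVIDER,
    "data protection and encryption": Owner.CUSTOMER,
    "operating systems you manage": Owner.CUSTOMER,
    "network and firewall rules": Owner.CUSTOMER,
    "application security": Owner.CUSTOMER,
}


def owned_by(owner: Owner) -> list[str]:
    """List the control areas assigned to one party, alphabetically."""
    return sorted(area for area, who in RESPONSIBILITY.items() if who is owner)


def unassigned(areas: list[str]) -> list[str]:
    """Return checklist areas nobody owns yet: gaps to settle before signing."""
    return [area for area in areas if area not in RESPONSIBILITY]


print(owned_by(Owner.PROVIDER))
print(unassigned(["application security", "backup and restore testing"]))
```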

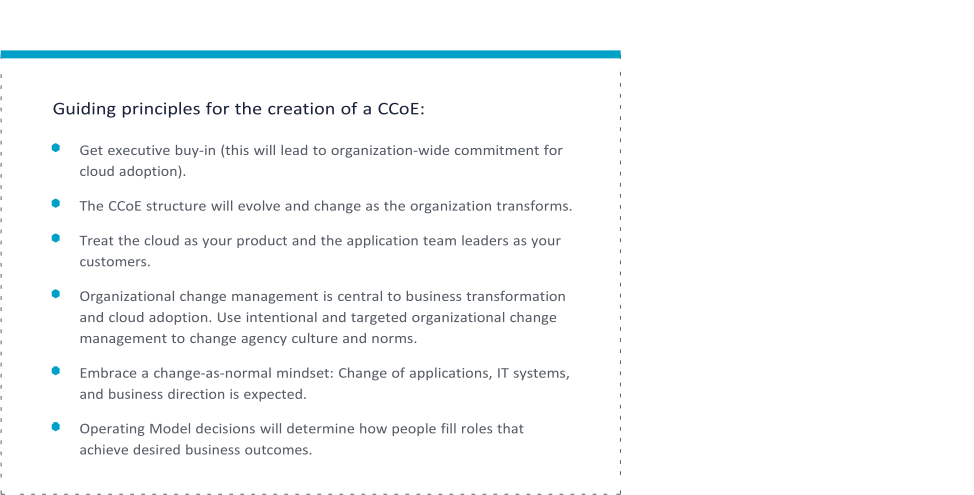

Employee buy-in

Employees will climb the learning curve for your new systems faster if there is substantial buy-in from the start. You need a communication strategy that helps your workers understand the migration process, its benefits, and their role in it. Employee training should be part of your strategy.

Change management to the pay-as-you-go model

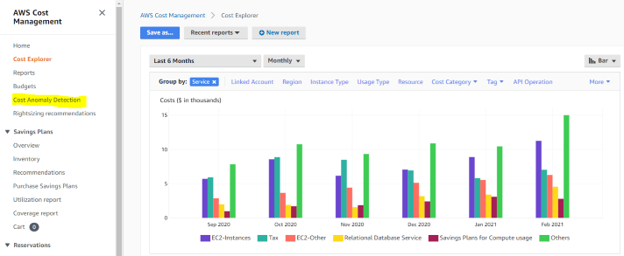

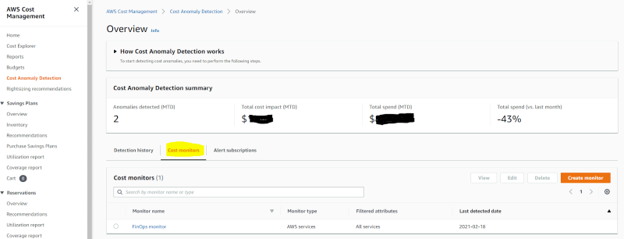

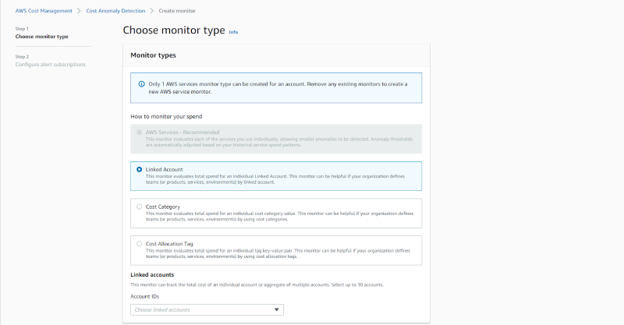

Like any other big IT project, shifting to the cloud significantly changes your business operations. Managing workloads and applications in the cloud differs significantly from managing them on-prem. Some functions will become redundant, while other roles may take on additional responsibilities. With most cloud platforms running a pay-as-you-go model, businesses increasingly need to manage their cloud operations efficiently. You’d be surprised at how easily your cloud costs can get out of control.

In fact, according to Gartner, enterprises waste an estimated 35% of their cloud spend, and global cloud waste is forecast to reach $21 billion by 2021.
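A common source of that waste is resources that keep billing while sitting nearly idle. The following Python sketch assumes you have already exported each resource's monthly cost and average CPU utilization; the `Instance` fields, the sample fleet, and the 10% threshold are all hypothetical. It flags candidates for rightsizing or shutdown and totals their cost.

```python
from dataclasses import dataclass


@dataclass
class Instance:
    name: str
    monthly_cost: float      # what you pay per month for the resource
    avg_cpu_percent: float   # average CPU utilization over the review period


def flag_idle(instances, cpu_threshold=10.0):
    """Return instances whose average CPU is below the (hypothetical) threshold."""
    return [i for i in instances if i.avg_cpu_percent < cpu_threshold]


def estimated_waste(instances, cpu_threshold=10.0):
    """Monthly cost of flagged instances: a rough upper bound on possible savings."""
    return sum(i.monthly_cost for i in flag_idle(instances, cpu_threshold))


# Hypothetical fleet exported from your monitoring tool.
fleet = [
    Instance("web-1", 300.0, 55.0),
    Instance("batch-old", 450.0, 2.5),
    Instance("test-db", 200.0, 4.0),
]
print([i.name for i in flag_idle(fleet)])  # ['batch-old', 'test-db']
print(estimated_waste(fleet))              # 650.0
```

CPU alone can mislead (a quiet database may still be essential), so treat the result as a shortlist to review rather than an automatic shutdown list.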

Migrating legacy applications

Legacy applications were often designed a decade or more ago, and even though they don't reflect your business’s modern environment, they host your mission-critical processes. How do you convert these systems or connect them to cloud-based applications?

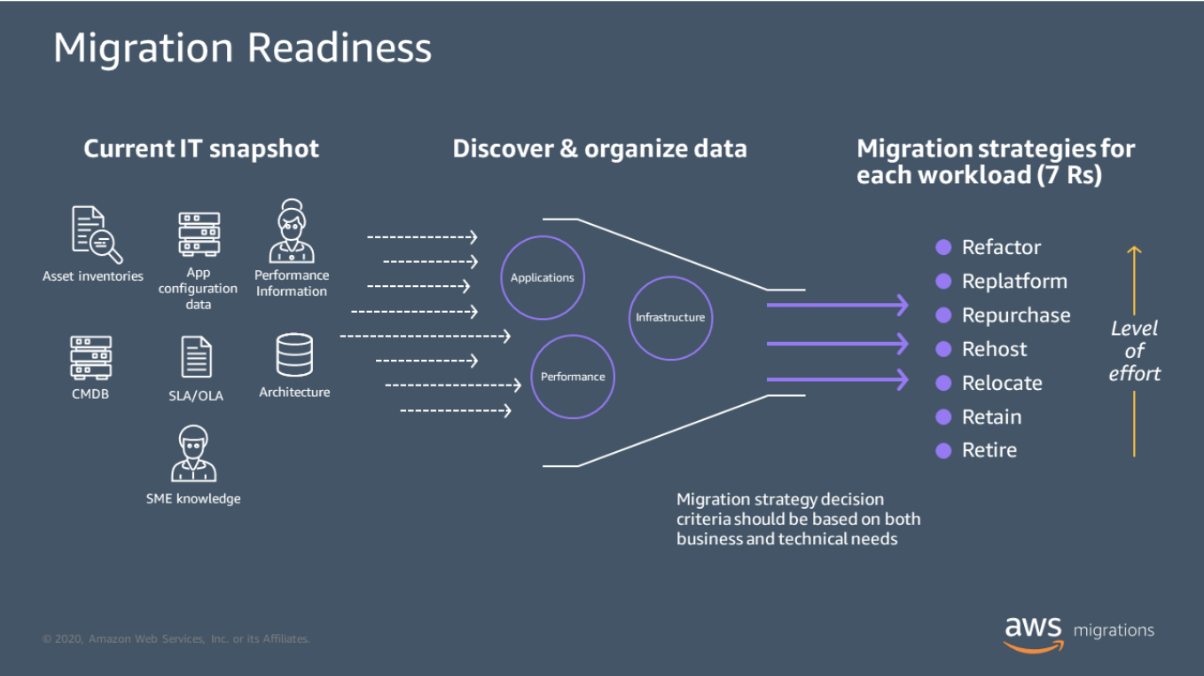

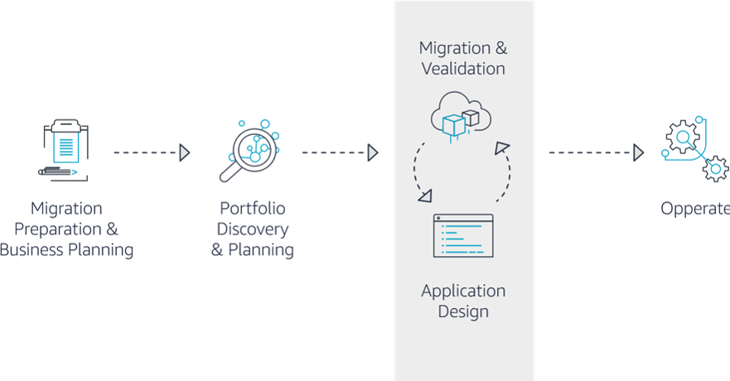

Steps to a successful cloud migration

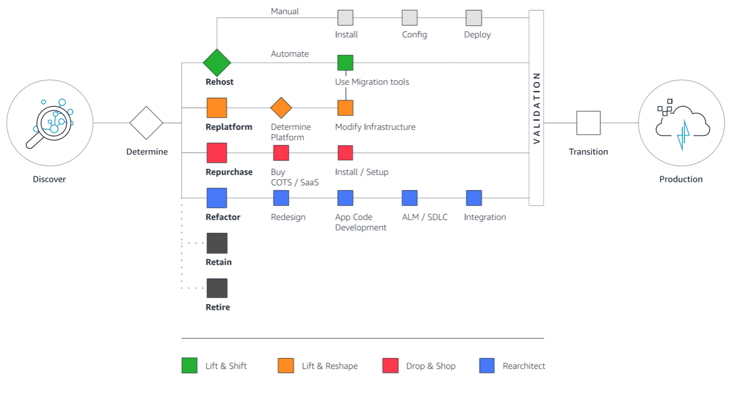

You may be familiar with the 6 R’s, which are 6 common strategies for cloud migration. Check out our recent post on the 6 R’s to cloud migration.

Additionally, follow these steps to smoothly migrate your infrastructure to the public cloud:

Define a cloud migration roadmap

This is a detailed plan that involves all the steps you intend to take in the cloud migration process. The plan should include timeframes, budget, user flows, and KPIs. Starting the cloud migration process without a detailed plan could lead to a waste of time and resources. Effectively communicating this plan improves support from senior leadership and employees.

Application assessment

Map your current infrastructure and evaluate the performance and weaknesses of your applications. This evaluation lets you weigh the cost of the planned cloud migration against its value, based on the current state of your infrastructure. It also helps you decide the best approach to modernization: whether your apps need replatforming or can be lifted and shifted to the cloud.

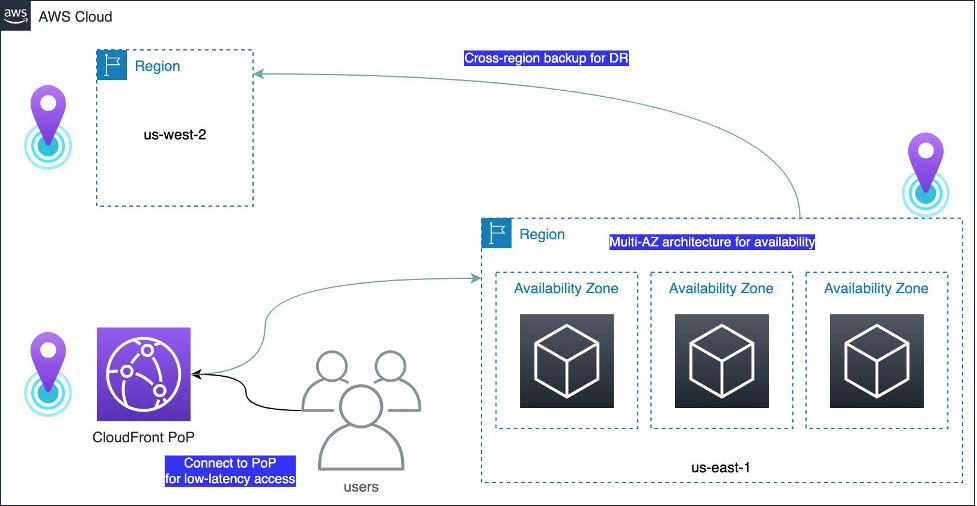

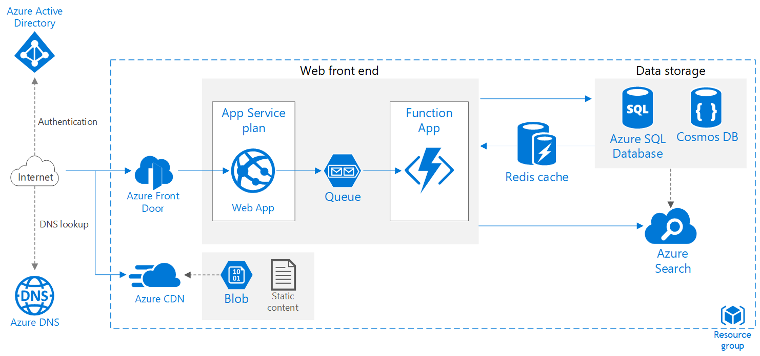

Choose the right platform

Your landing zone could be a public cloud, a private cloud, a hybrid, or a multi-cloud. The choice here depends on your applications, security needs, and costs. Public clouds excel in scalability and have a cost-effective pay-per-usage model. Private clouds are suitable for a business with stringent security requirements. A hybrid cloud is where workloads can be moved between the private and public clouds through orchestration. A multi-cloud environment combines IaaS services from two or more public clouds.

Find the right provider

If you are going with the public, hybrid, or multi-cloud deployment model, you will have to choose between the cloud providers on the market (mainly Amazon Web Services, Google Cloud, and Microsoft Azure) and among various control and optimization tools. Critical factors to consider in this decision include security, costs, and availability.

Fashion and other fields have their fads, but technology trends are not passing fancies. Trends such as big data, machine learning, artificial intelligence, and remote working can have extensive implications for a business's future. Business survival, recovery, and growth depend on your agility in adopting and adapting to an ever-changing business environment. Moving from on-prem to the cloud is one way businesses can tap into the potential of advanced technology.

The key drivers

Investment resources are used much more efficiently in the cloud. With on-demand service models, businesses can optimize efficiency and save on software, infrastructure, and storage costs.

For a business that is rapidly expanding, cloud migration is the best way to keep the momentum going. There is a promise of scalability and simplified application hosting. It eliminates the need to install additional servers, for example, when eCommerce traffic surges.
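The scaling promise usually rests on target-tracking autoscaling: measure a metric such as average CPU, compare it to a target, and resize the fleet proportionally. The Python sketch below uses the same ratio formula that the Kubernetes Horizontal Pod Autoscaler documents, `ceil(currentReplicas * currentMetric / targetMetric)`, clamped to minimum and maximum bounds. It is an illustration, not any cloud provider's API, and the numbers are hypothetical.

```python
import math


def desired_replicas(current_replicas: int, current_metric: float,
                     target_metric: float, min_replicas: int = 1,
                     max_replicas: int = 20) -> int:
    """Target-tracking scale calculation, clamped to [min_replicas, max_replicas]."""
    if target_metric <= 0:
        raise ValueError("target_metric must be positive")
    # Scale the fleet in proportion to how far the metric is from its target.
    raw = math.ceil(current_replicas * current_metric / target_metric)
    return max(min_replicas, min(max_replicas, raw))


# Traffic surge: 4 servers averaging 90% CPU against a 60% target.
print(desired_replicas(4, 90, 60))  # 6
# Quiet period: 6 servers averaging 20% CPU.
print(desired_replicas(6, 20, 60))  # 2
```

The clamp matters in practice: the upper bound caps the bill during a surge, and the lower bound keeps a minimum of capacity online when traffic drops.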

Remote working is currently the strongest push factor. As COVID-19 disrupts operations everywhere, even businesses that never considered cloud migration before have been forced to implement either partial or full cloud migration. Employees can access business applications and collaborate from any corner of the world.

Best Practices

Choose a secure cloud environment

The leading public cloud providers are AWS, Azure, and GCP (check out our detailed comparison of the three). They all offer competitive hosting rates that suit small and medium-sized businesses. However, resources are shared, as in an apartment building with multiple tenants, so security is an issue that quickly comes to mind.

The private cloud is an option for businesses that want more control and assured security. Private clouds are often a requirement for businesses that handle sensitive information, such as hospitals and DoD contractors.

A hybrid cloud, on the other hand, gives you the best of both worlds. You have the cost-effectiveness of the public cloud when you need it. When you demand architectural control, customization, and increased security, you can take advantage of the private cloud.

Scrutinize SLAs

The service level agreement (SLA) is the one document that clearly states what you should expect from a cloud vendor, so read it carefully. Some enterprises have started cloud migration only to run into challenges because of vendor lock-in.

Choose a cloud provider with an SLA that supports the easy transfer of data. This flexibility can help you overcome technical incompatibilities and high costs.

Plan a migration strategy

Once you identify the best type of cloud environment and the right vendor, the next requirement is to set a migration strategy. When creating it, consider costs, employee training, and estimated downtime of business applications. Some strategies suit a given situation better than others:

- Rehosting, or "lift and shift", may be the easiest approach: your systems move to the cloud with no changes to their architecture. At a time when businesses must move to the cloud quickly to support remote working, rehosting can save time and money. Its main disadvantage is that it leaves no room to optimize costs and app performance for the cloud.

- Replatforming involves making small changes to workloads before moving them to the cloud. These targeted modifications improve performance in the cloud. An example is moving an app's self-managed database to a managed database service in the cloud.

- Refactoring gives you all the advantages of the cloud, but it requires more investment in the migration process. It involves re-architecting your applications to meet your business needs while maximizing efficiency, optimizing costs, and applying best practices to tailor your cloud environment. The result is optimized app performance and efficient use of the cloud infrastructure.

Know what to migrate and what to retire

A cloud migration strategy can combine elements of rehosting, replatforming, and refactoring. The important thing is to identify your resources and the dependencies between them. Not every application or dependency needs to move to the cloud.

For instance, instead of running SMTP email servers, organizations can switch to a SaaS email platform on the cloud. This helps to reduce wasted spend and wasted time in cloud migration.
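Mapping those dependencies also tells you the order in which to move things. A minimal sketch, assuming a hypothetical inventory where each component lists the components it depends on, can use Python's standard `graphlib.TopologicalSorter` (Python 3.9+) to drop retired components and produce an order in which every dependency migrates before the applications that need it.

```python
from graphlib import TopologicalSorter

# Hypothetical inventory: each component maps to the components it depends on.
dependencies = {
    "storefront": {"orders-api", "auth"},
    "orders-api": {"orders-db"},
    "auth": {"users-db"},
    "reporting": {"orders-db"},
    "orders-db": set(),
    "users-db": set(),
    "smtp-server": set(),
}

# Components to retire or replace with SaaS (e.g. a hosted email platform).
retire = {"smtp-server"}


def migration_order(deps: dict[str, set[str]], retire: set[str]) -> list[str]:
    """Return kept components ordered so every dependency precedes its dependents."""
    kept = {app: needs for app, needs in deps.items() if app not in retire}
    for app, needs in kept.items():
        blocked = needs & retire
        if blocked:
            raise ValueError(f"{app} still depends on retired component(s): {sorted(blocked)}")
    # static_order() raises graphlib.CycleError if the dependencies form a cycle.
    return list(TopologicalSorter(kept).static_order())


order = migration_order(dependencies, retire)
print(order)
```

If a kept application still depends on something marked for retirement, the function refuses to continue, which is a prompt to replace that dependency first. A dependency cycle raises `graphlib.CycleError`, a hint that those components should move together.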

Train your employees

Workflow modernization only works well if employees support it. Without training, workers avoid the new technology or run into productivity and efficiency problems.

A cloud migration strategy must include employee training as a component. Start communicating the move before it happens. Ask your workers about the most critical challenges they face, and gear the migration toward solving them.

Further, ensure that your cloud migration team is up to the task. Your operations, design, and development teams are the torchbearers of the move. Do they have the experience and skill sets to effect a quick and cost-effective migration?

To Conclude:

Cloud migration can be a lengthy and complex process. However, with proper planning and strategy execution, you can avoid challenges and achieve a smooth transition. A fool-proof approach is to pick a partner that possesses the expertise, knowledge, and experience to see the big picture of your current and future needs, thus tailoring a solution that fits you like a glove, in all aspects.

At Cloudride, we have helped many businesses attain faster and more cost-effective cloud migrations.

We are Microsoft Azure and AWS partners and are here to help you choose a cloud environment that fits your business demands, needs, and plans.

We provide custom-fit cloud migration services with special attention to security, vendor best practices, and cost efficiency.

Click here for a free one-on-one consultation call!

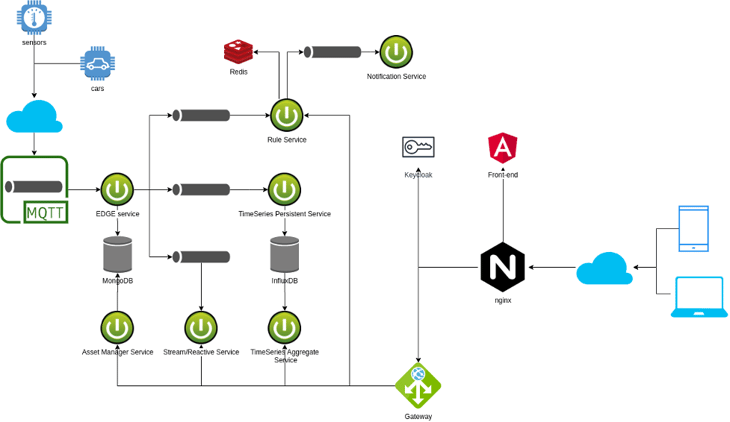

Critical Aspects of an IoT Architecture

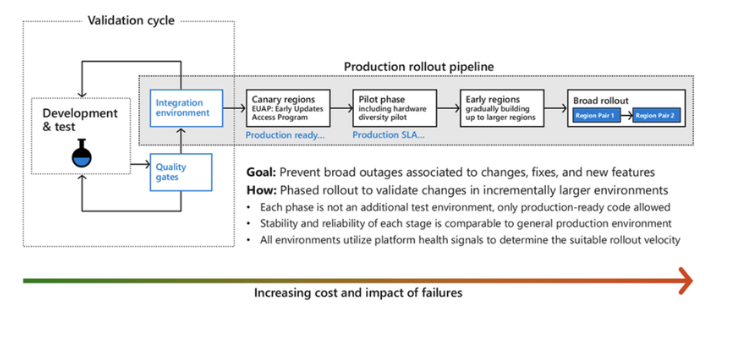

These canary regions are run through tests and end-to-end validation to practice the detection and recovery workflows that would run if anomalies occurred in real life. Periodic fault injections or disaster recovery drills are carried out at the Region or Availability Zone level to ensure the software update is of the highest quality before the change rolls out broadly to customers and their workloads.
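The staged-rollout idea behind canary regions can be sketched in a few lines of Python: deploy to a small first wave, validate it, and only then continue to broader waves, halting as soon as a region fails. The region names and the `deploy` and `validate` callables are hypothetical placeholders for real deployment tooling and health checks.

```python
from typing import Callable, List, Sequence, Tuple


def staged_rollout(
    waves: Sequence[Sequence[str]],
    deploy: Callable[[str], None],
    validate: Callable[[str], bool],
) -> Tuple[List[str], List[str]]:
    """Roll a change out wave by wave.

    Returns (regions that received the change, regions that failed validation).
    If any region in a wave fails, later waves are never touched.
    """
    deployed: List[str] = []
    for wave in waves:
        for region in wave:
            deploy(region)
            deployed.append(region)
        failed = [region for region in wave if not validate(region)]
        if failed:
            # Halt here; the caller should roll back everything in `deployed`.
            return deployed, failed
    return deployed, []


# Hypothetical waves: a single canary region first, then progressively wider.
waves = [["canary-1"], ["region-a", "region-b"], ["region-c", "region-d"]]
deployed, failed = staged_rollout(
    waves,
    deploy=lambda region: print(f"deploying to {region}"),
    validate=lambda region: region != "region-b",  # simulate one unhealthy region
)
print(deployed, failed)
```

Because the change stops at the first failing wave, a bad update reaches only the canary and the regions validated alongside it, never the remaining customer regions.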
